The thing that matters

Of GenAI, software development and greed

If it has to be about something, it better be about humanity.

When I read through the voices I hear in the mobile software development world I live in, I read lines of humanity facing machines we do not understand but too often trust. Systems created to automate the world, yet incapable of reading it.

We developers created these systems. We know their internals. Yet they still capture us, often tying us to forced workflows. They tricked us into leaning on them, only to bite back, showing the same unreliability and lack of skill that companies blame on their employees to justify cost-cutting measures.

We build software for machines, but we are not machines. The more we interact with them, the more we learn to think like them. But machines are just small mirrors of humanity, reflected in a piece of metal.

But that’s not it, I mean. A reflection is not the inexplicable essence that generates humanity.

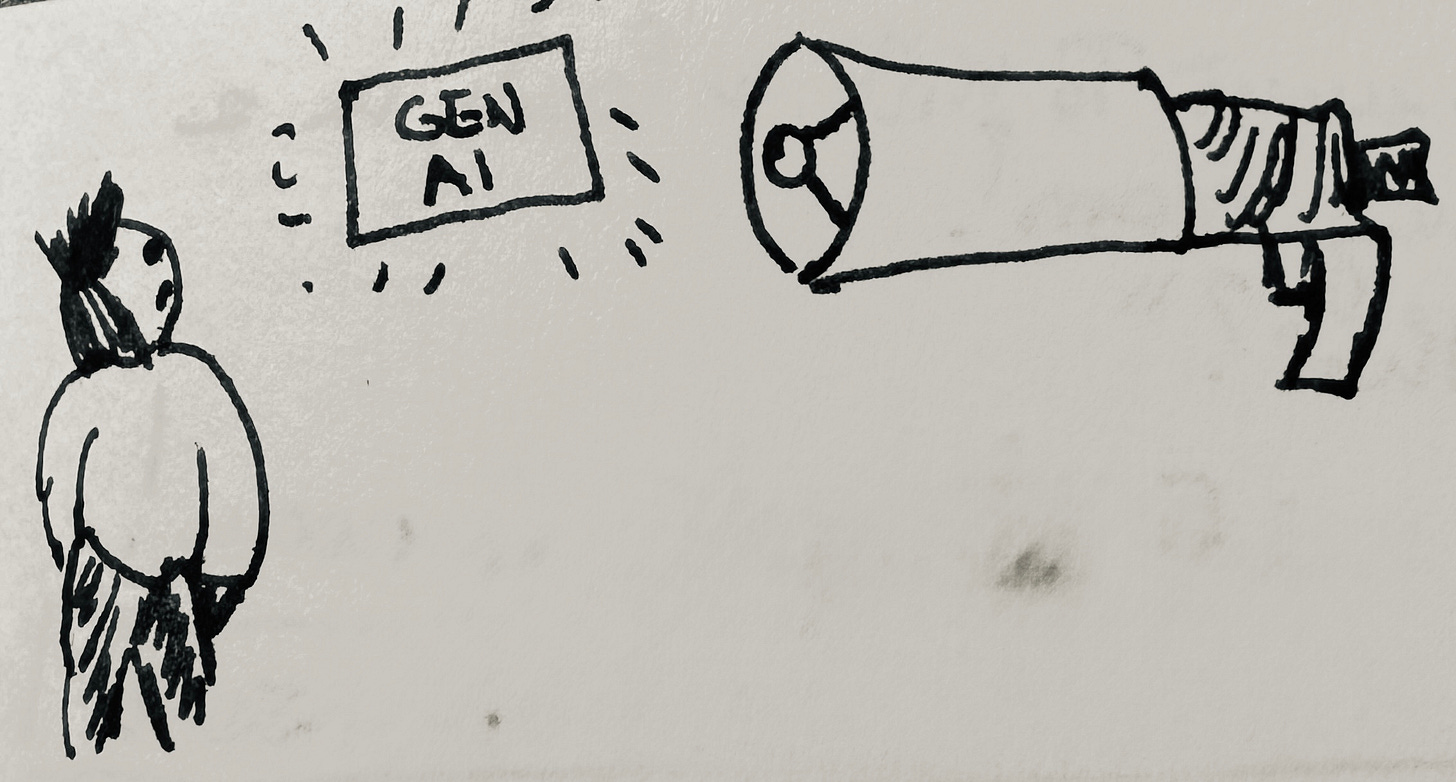

Will GenAI tools replace developers? Then we will rediscover that we are engineers. We solve problems, and we control the LLMs, because ultimately we are responsible for what the system does. Responsibility is where the game of trust is built.

Leaving these tools to roam free will cause damage. As professionals, we are still learning to tame them. We need to learn to build software sustainably and efficiently, leveraging GenAI in the places and ways that make the most sense. Experiment safely, iterate, and evaluate.

Safety, quality, speed, costs. There are many aspects to juggle, and LLMs cannot balance them properly on their own. This is basic engineering.¹

Engineering 101

We just need to rediscover it, because we often forget it. GenAI is so disruptive that it has exposed our inability to improve the development process once we were handed a powerful coding tool. Unfortunately for us, the tool is not that reliable. We need to learn where it actually shines and helps us in a meaningful, cost-effective way. Other uses should be stopped and their assumptions re-evaluated. Remember, these tools are meant to help us.

But how would you even know if they are helping you or not? Are you able to tell if your software development team is getting better at delivering value? How do you measure it?

But more importantly: were you measuring even before introducing GenAI tools? If you weren’t, how do you know they are helping you?

Conceptual integrity

If your code should have one characteristic, I would say it is conceptual integrity: coherence in how concepts are defined and how they relate to each other. How do you achieve that? GenAI can produce a lot of interesting content, but it struggles to guarantee long-term coherence.

For now, code still needs to be understood by humans when things get messy. Software reflects reality, so it is often messy. It is also messy because most of us cannot keep huge codebases from spiraling into complete entropy.

GenAI has learned from us. It doesn’t know any better.

These tools are pushed onto us. The companies that push the narrative predicting the disappearance of software developer jobs make the same tools that other companies force developers to use. Meanwhile, we build apps for users who can’t shake their desire to look at the screen. We know it’s true because, as developers, we are the same and fall into the trap of doomscrolling. Infinite pages of infinite waste. The black holes of our times, and of part of our brains. The irony of building apps by day and being enchanted by them by night. Maybe all day long.

“And it’s gonna get easier and easier, and more and more convenient, and more and more pleasurable, to be alone with images on a screen, given to us by people who do not love us but want our money.” - David Foster Wallace

So if it has to be about something, it better be about humanity then.

Writing the narrative

GenAI is a machine fed with data from the Internet: from communities and sources such as Wikipedia, Reddit, public science, and copyrighted material. What it spits out is shaped by what we wrote. We, as humans.

Now GenAI tools can create tons of new synthetic slop. That slop will inform the agents of the future, which will increasingly train on it. What do we get out of that? A world where anything a machine claims is probably true but often is not, sometimes in an eerily deceptive way. Dangerously wrong, in a rush to write ever more code. To model problems. Problems you hope the machine understands better than you do. But you wouldn’t know any better, would you? How could you! Understanding takes time, and if the machine can’t teach you fast enough, or you can’t make the machine teach you fast enough, where are we all going?

Should you still write and share on the Internet?

The answer should always be yes. But if and when this ceases to be true, the Internet will have lost a huge part of its humanity. We wanted to create and share knowledge, truth, and hope around the world. Not fake data. Not fake truths in the rush for more money.

What if we realise we forfeited the human part of the Internet for a machine that generates data? A machine that thinks it knows us, but doesn’t. It lacks that fundamental humanity. The one I can’t describe, but often feel.

What’s a drop of human wisdom in a sea of AI slop knowledge? Probably not much. Unless it finds its way to some other human part of the system. To someone far away who comprehends it because it resonates with their past experiences. Someone who reads the humanity between the lines. The struggle that binds.

GenAI tools better learn from our best content. Our best ideas, questions, and dialogues. Maybe LLMs will learn to parrot back our best intentions. Spelling them out better than we could. We hope.

But should we listen to them? What kind of life is one where the machine tells you how to live? How to feel? What to do? Where it forces you to do more, in a battle for speed over deep understanding of what’s around you?

What’s going to change?

Well, something will. But not all changes will be good. We have a responsibility to control GenAI’s impact on software development.

We can improve, but disruptions can be costly in the long term. Power without control is a fool’s act. We need to govern these systems, not be powerless spectators of their foolish race for productivity.

We remain as if waiting by the phone. Waiting for the next ring. For the next thing.

Someone is not responding. But who?

¹ I acknowledge that actual engineers may find this obvious. But the software industry is quite bad at remembering what matters.