Reasoning about AI & code reviews

Analysis of a team's process dynamics when introducing AI tools while keeping a mandatory code review step

This is an essay about a software development process problem. The case under study assumes a team that does code reviews before merging code into the mainline branch. The review step is not negotiable; imagine, for example, that it is a company-wide mandate.

Developers mostly work in parallel on separate features. As a result, they struggle to review each other’s pull requests (PRs) because they lack context, which signals a problem of cognitive overload and excessive context switching.

We also assume that if there is a product group, it is able to support the development team by providing sufficient details and context to unblock development. In other words, developers know what needs to be done.

Given such an environment, and allowing for fluctuations in the efficiency of the system over time, imagine the scenario in which we introduce AI tools into the development process.

Why do this?

Sector-wide problem

Companies are rushing to introduce AI tools into their software development flow. These are now powerful tools that can generate a lot of code, but this brings a dilemma:

The more code we produce, the more we have to review. This immediately leads to a fork in the path:

keep the code review step

remove the code review step

This would have been a spicy question even before AI tools came into the picture. The generative power of AI tools is pushing the limits of software development processes that require a code review step, while showing that this extra step originates from a lack of confidence in safely applying changes to software.

I read in The Pragmatic Engineer how some companies are moving away from pull requests towards something more focused on intent, letting the AI do the rest (more or less). This approach is being used in open source projects, so it does not (yet) represent the reality of much of the proprietary code currently being developed.

Personal Interest

I have personally experienced this issue and I find it an interesting challenge. The key here is to hold the assumption of the context to be true, meaning that code review is mandatory. This is obviously a biased starting point, but it forces us to face a reality in which some things cannot be changed by everybody, or at least not right away.

Practice, practice, practice

I often try to analyse a complex problem and model its dynamics in order to understand the possible side effects of any intervention we apply. This scenario is complex because it involves different people constrained by a set of spoken and unspoken rules and assumptions. Learning to find and articulate them is vital when dealing with such complexity.

Analysing the effects

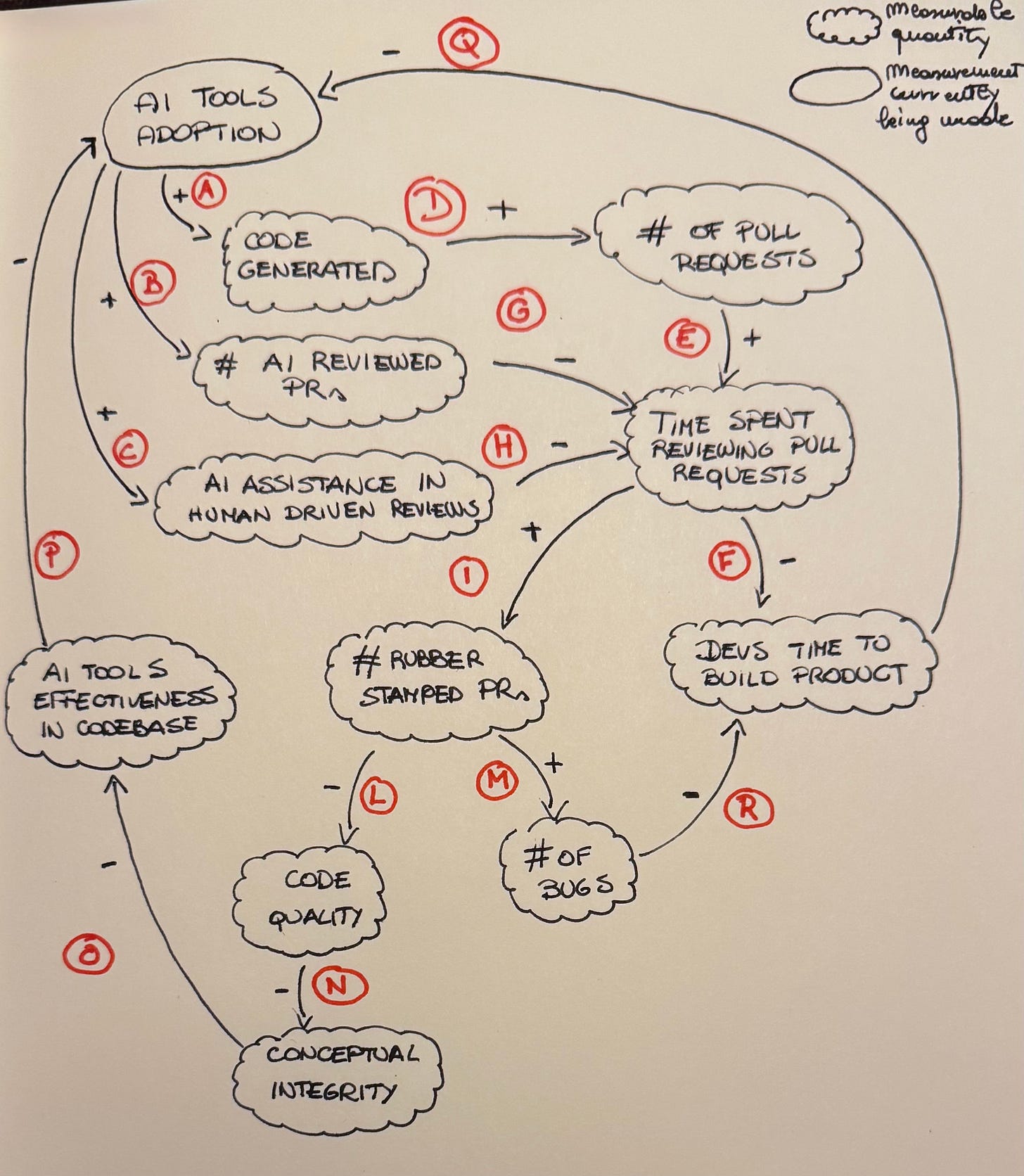

This is a diagram of effects. It shows how certain measurements can create unexpected indirect effects. To be clear, the measurements themselves do not generally cause the issue; rather, by knowing or tracking a value, people change their behavior, intentionally or not.

Effects can be positive (more of) or negative (less of). For example, more adoption of AI tools leads to more code being generated, while more time spent reviewing pull requests leads to less developer time to build the actual product.
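One way to make this notation concrete is to write each link as a signed edge and multiply the signs along a chain. The sketch below is only an illustration: the node names are hypothetical, the middle link compresses several steps of the diagram into one, and it reads +1 as "moves in the same direction" and -1 as "moves in the opposite direction".

```python
from math import prod

# +1: the target moves in the same direction as the source (more leads to more).
# -1: the target moves in the opposite direction (more leads to less).
# Node names are illustrative assumptions, not labels taken from the diagram.
effects = {
    ("ai_adoption", "code_generated"): +1,
    ("code_generated", "review_time"): +1,  # simplification: skips the PR step
    ("review_time", "build_time"): -1,
}

def path_sign(path, effects):
    """Multiply the signs of consecutive links along a path of node names."""
    return prod(effects[(a, b)] for a, b in zip(path, path[1:]))

chain = ["ai_adoption", "code_generated", "review_time", "build_time"]
print(path_sign(chain, effects))
```

A negative product means that, along this chain, more of the first quantity ends up producing less of the last one.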

Let’s start from the AI tools adoption initiative. It is a measurement that is still taking shape while being defined (hence the elliptical shape), because it covers a wide spectrum of different tools and approaches.

A - AI tools help generate more code

The effect is caused by the power of these AI tools to generate a lot of code very fast. This amount of power takes a lot of skill to control, so that it does not end up flooding the codebase with messy and buggy code.

D - More code means more pull requests

Either more pull requests, or bigger ones, or both. This increases the burden on the rest of the team. In the best scenario, if all the developers increase their output, we can expect a similar increase in the burden on developers to review their colleagues’ code.

E - More PRs lead to developers spending more time on code review

All the code that gets generated has to be reviewed (per company policy), which means developers need to cut down development time to review others’ code.

B - AI can automatically review and approve changes

For certain simple and safe changes, AI tools can offer a first line of protection against regressions. If it is possible to define high-level rules for which changes are safe, the work can be automated. The more reviews are automated by AI, the less time developers spend reviewing code (G).
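As a rough illustration of what such high-level rules could look like, here is a minimal sketch. Everything in it is a hypothetical assumption made for the example: the `Change` type, the file patterns, and the size limit are not taken from any real tool or policy.

```python
from dataclasses import dataclass
from fnmatch import fnmatch

# Hypothetical rule set: both the patterns and the limit are illustrative.
SAFE_PATTERNS = ["docs/*", "*.md"]
MAX_CHANGED_LINES = 50

@dataclass
class Change:
    path: str            # file touched by the pull request
    lines_changed: int   # added plus removed lines in that file

def is_auto_approvable(changes):
    """True only if every file matches a safe pattern and the total diff is small."""
    if not changes:
        return False
    total = sum(c.lines_changed for c in changes)
    all_safe = all(
        any(fnmatch(c.path, pattern) for pattern in SAFE_PATTERNS)
        for c in changes
    )
    return all_safe and total <= MAX_CHANGED_LINES

print(is_auto_approvable([Change("README.md", 10)]))
print(is_auto_approvable([Change("src/app.py", 2)]))
```

The point of the sketch is that the rules are explicit and conservative: anything that does not clearly match falls back to a human review.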

C - AI tools can assist while reviewing code

AI can offer assistance when reviewing code because it can help answer developers’ questions. This assumes a human-driven approach, meaning that a person needs to physically review the code and can’t delegate to an AI reviewer. This type of support helps save time when reviewing code (H).

I - More time spent reviewing code can lead to increased rubber stamping

Developers could lower their standards in an attempt to keep up with the volume of changes, leading to PRs that give the illusion of safety but are merely rubber-stamped. This is not an automatic result, but one can reasonably expect it to happen, especially considering that it can occur even without AI tools in the picture.

F - More time spent reviewing code leads to less time spent building product

The intuitive thing to think in this scenario is that you want developers to work on developing and improving the product as much as possible. After all, that is what many executives hope for when pushing to introduce these tools.

Reviewing each other’s code does not directly contribute to that. Whether you agree with the process of reviewing code or not, one can see how the time spent reviewing is subtracted from the time to build. In the end all the changes help, but individual contributors may lack the ability and the incentive to see how reviewing other developers’ code helps achieve their goals. In a scenario where everybody is producing, for example, 30% more code changes, you will have 30% more code to review. That is a big impact on time allocation, and one that can affect the very ability to produce more changes.
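To get a feel for the numbers, here is a small back-of-the-envelope sketch. The 40-hour week and the 8 hours of baseline review time are assumptions made up for the example, and so is the premise that review effort scales linearly with the amount of code to review.

```python
def weekly_split(total_hours, review_hours, output_increase):
    """Return (review, build) hours after output grows by `output_increase`.

    Assumes review effort grows linearly with the volume of code to review.
    """
    new_review = review_hours * (1 + output_increase)
    return new_review, total_hours - new_review

# Assumed baseline: 40 hours per week, 8 of them spent reviewing.
review, build = weekly_split(total_hours=40, review_hours=8, output_increase=0.30)
print(round(review, 1), round(build, 1))
```

Under these assumptions review time grows from 8 to about 10.4 hours, and building time shrinks from 32 to about 29.6 hours. In other words, the team would need to produce 30% more changes in roughly 7.5% less building time.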

L - More rubber stamped PRs lead to lower code quality

AI slop is a thing if one lets AI tools roam free or lacks the ability to keep them focused and coherent. If more code gets produced and more slop slips in, we can expect lower code quality. Why bother with code quality with AI? Good question. My short answer is that AI tools still work better with clarity than with ambiguity. Code entropy has a profound impact on the ability to deliver.

M - More rubber stamped PRs lead to more bugs

Intuitively, more code shipped with poor review leads to more bugs. To be fair, code reviews help catch bugs, but they are neither the only practice for doing so nor the best one. However, for the sake of this reasoning we assume that everything else (e.g. the team’s ability to test) stays similar, even if enhanced with AI.

R - More bugs means less time to develop the product

Obviously if there are more bugs, developers will need to spend time fixing them instead of expanding the product.

N - Lower code quality leads to less conceptual integrity

What is conceptual integrity?

Conceptual Integrity is achieved in a system when its central concepts harmoniously work together, striking a suitable balance between end-user features and nonfunctional requirements like maintainability and performance.

(Science Direct)

Fred Brooks, the author of “The Mythical Man-Month”, said:

I will contend that conceptual integrity is the most important consideration in system design. It is better to have a system omit certain anomalous features and improvements, but to reflect one set of design ideas, than to have one that contains many good but independent and uncoordinated ideas.

The adoption of AI tools and the subsequent increase in generated code can destroy the cohesion of the system by cramming too many separate concepts together.

O - Less conceptual integrity leads to reduced effectiveness of AI tools

Reduced conceptual integrity increases the risk of hallucinations or imprecision from AI tools operating on the code. When AI sees too many concepts that are not necessarily useful, it can easily get sidetracked and end up deteriorating the codebase’s conceptual integrity even further.

P - Less effective AI tools lead to reduced AI tools adoption

When introducing AI tools, one has to assume they do not necessarily increase productivity; therefore, measuring their impact is critical. If it turns out that adopting them makes the whole system less effective and efficient, the adoption should be stopped or adapted.

Q - Less developer time to build the product leads to less AI tools adoption

A funny effect of adopting AI tools is that developers can end up having less time to develop the product, which in turn reduces the adoption of AI tools. Doing more work leads to doing less work. That would be a surprising paradox!
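The links described above close into feedback loops, and a short sketch can enumerate them. This is my own encoding of the essay's links, not a reproduction of the diagram: the node names are assumptions, B/G and C/H are assumed to pass through intermediate "automated reviews" and "review assistance" nodes, and each link is read as +1 (same direction) or -1 (opposite direction).

```python
# label: (source, target, sign). Node names are illustrative assumptions.
LINKS = {
    "A": ("ai_adoption", "code_generated", +1),
    "B": ("ai_adoption", "automated_reviews", +1),
    "C": ("ai_adoption", "review_assistance", +1),
    "D": ("code_generated", "pull_requests", +1),
    "E": ("pull_requests", "review_time", +1),
    "F": ("review_time", "build_time", -1),
    "G": ("automated_reviews", "review_time", -1),
    "H": ("review_assistance", "review_time", -1),
    "I": ("review_time", "rubber_stamping", +1),
    "L": ("rubber_stamping", "code_quality", -1),
    "M": ("rubber_stamping", "bugs", +1),
    "N": ("code_quality", "conceptual_integrity", +1),
    "O": ("conceptual_integrity", "ai_effectiveness", +1),
    "P": ("ai_effectiveness", "ai_adoption", +1),
    "Q": ("build_time", "ai_adoption", +1),
    "R": ("bugs", "build_time", -1),
}

def loops_through(start):
    """Return (labels, sign) for every simple loop that starts and ends at `start`."""
    found = []

    def walk(node, labels, sign, seen):
        for label, (src, dst, link_sign) in LINKS.items():
            if src != node:
                continue
            if dst == start:
                found.append((labels + [label], sign * link_sign))
            elif dst not in seen:
                walk(dst, labels + [label], sign * link_sign, seen | {dst})

    walk(start, [], 1, {start})
    return found

for labels, sign in loops_through("ai_adoption"):
    kind = "reinforcing" if sign > 0 else "balancing"
    print("-".join(labels), kind)
```

Under this encoding the sketch finds nine loops. The three that start with A-D-E (more generated code) are balancing, including A-D-E-F-Q, which is the paradox described above. The six that start with B-G or C-H (AI helping with reviews) are reinforcing.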

Conclusion

This analysis does not provide a solution to the problem, but a solution can only arrive once you have understood the complexity of the situation. Building it has helped me think through the implications of much of what happens when AI tools are introduced into software development. Ultimately this is a model of the problem, and therefore a simplification. The model is not important; what you learn by building it is.